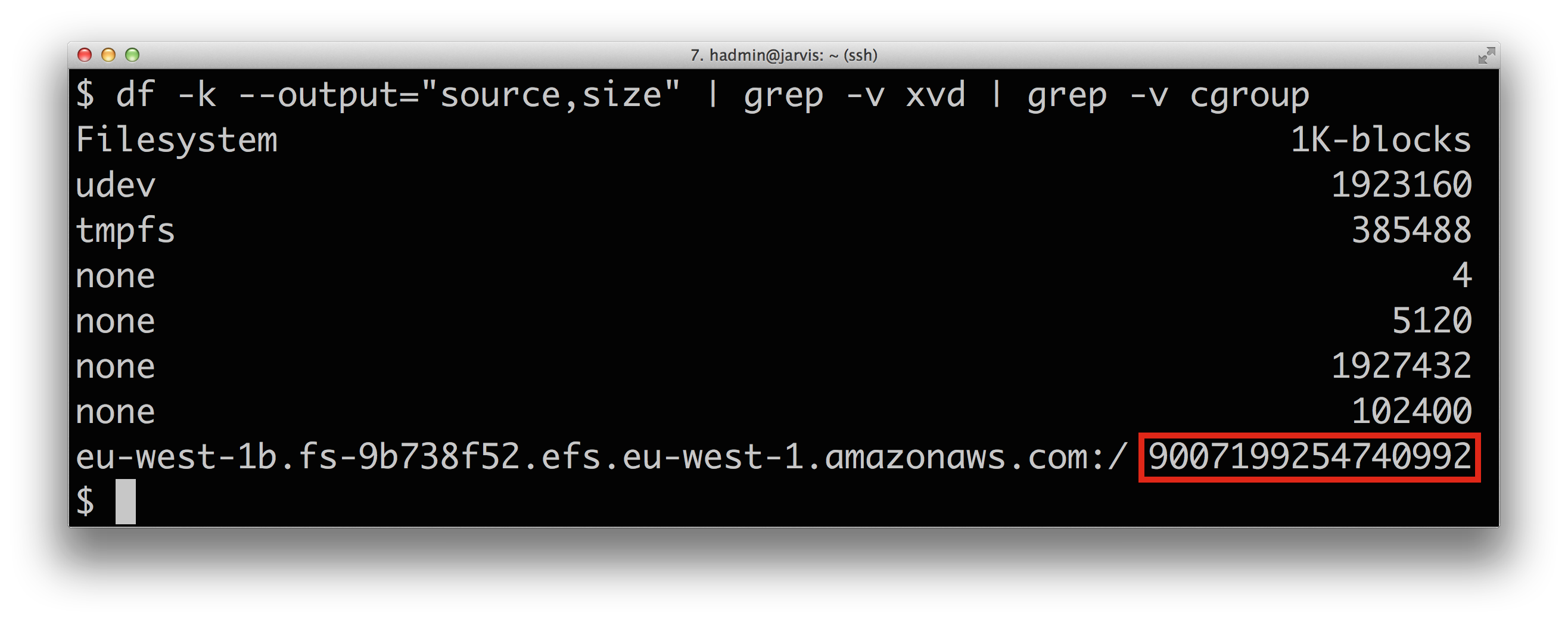

Amazon Web Services launched another first earlier this year in the form of a virtual server (or EC2 instance as they call it) with a staggering 128 virtual CPUs and over 1.9 Terabytes of memory.

The instance, which is an x1.32xlarge in their naming scheme, could cost you as much as $115,000 per year to operate, but you could reduce that figure significantly (e.g. to $79,000) if you knew ahead of time that you would be running it 24×7 for the whole year.

In any case, during a recent experiment using one of these instances, we set about trying to find some novel ways to max out the processing power and memory, and here are the two techniques we settled on (with evidence of each of them in action).

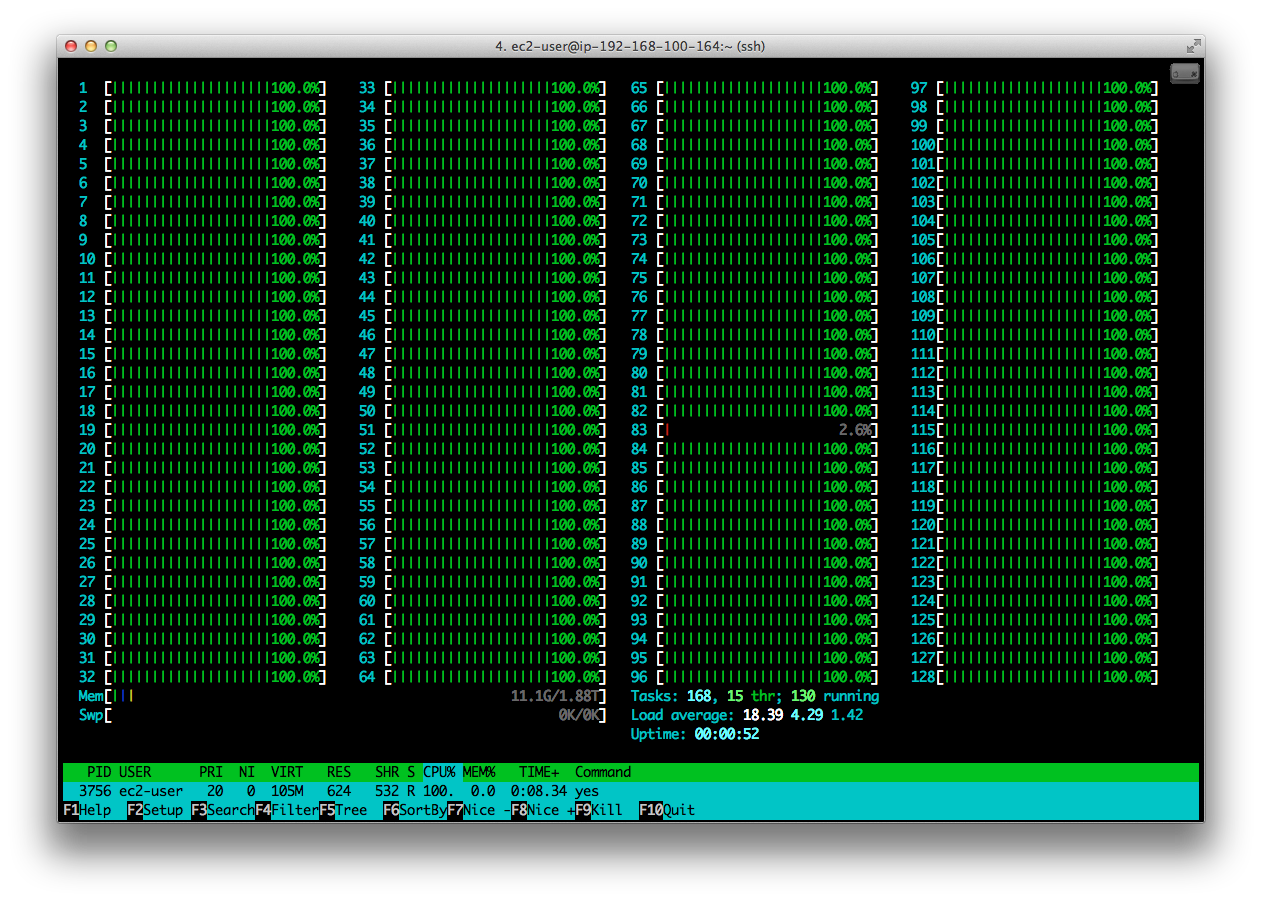

CPU Exhaustion

This was strangely a lot easier than we expected and simply involved using the Unix yes command. When it is used in isolation from its normal purpose (feeding answers to another program that sets the pace), yes just prints the same line in a tight loop, which keeps a processor fully busy.

So for our x1.32xlarge instance, with its 128 vCPUs, we used the command below to spawn 127 processes, each running the yes command, and we then monitored its impact using the htop command.

$ for i in {1..127}; do yes>/dev/null & done

And here it is in action:

The reason for spawning just 127 processes (instead of the full 128) was to ensure that the htop monitoring utility itself would have enough resources to be able to function, which can be seen clearly above.
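If you want to try this without leaving stray processes behind, the one-liner can be wrapped in a small function that also cleans up after itself. This is only a sketch: the name burn_cpu and its two arguments are our own invention for illustration, not something we ran during the experiment.

```shell
# Hypothetical helper (not part of the original experiment): start a number
# of busy 'yes' workers, let them run for a while, then stop them again.
burn_cpu() {
    workers=$1      # how many 'yes' processes to start
    seconds=$2      # how long to keep them running
    pids=""
    i=0
    while [ "$i" -lt "$workers" ]; do
        yes > /dev/null &       # each worker spins on one CPU
        pids="$pids $!"         # remember its PID for cleanup
        i=$((i + 1))
    done
    sleep "$seconds"
    if [ -n "$pids" ]; then
        # Word splitting on $pids is intentional: one argument per PID.
        kill $pids
        wait $pids 2>/dev/null || true
    fi
    echo "stopped $i workers"
}
```

To mirror the approach above and leave one processor free for monitoring, it could be called as burn_cpu "$(( $(nproc) - 1 ))" 60, assuming the nproc utility from GNU coreutils is available.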

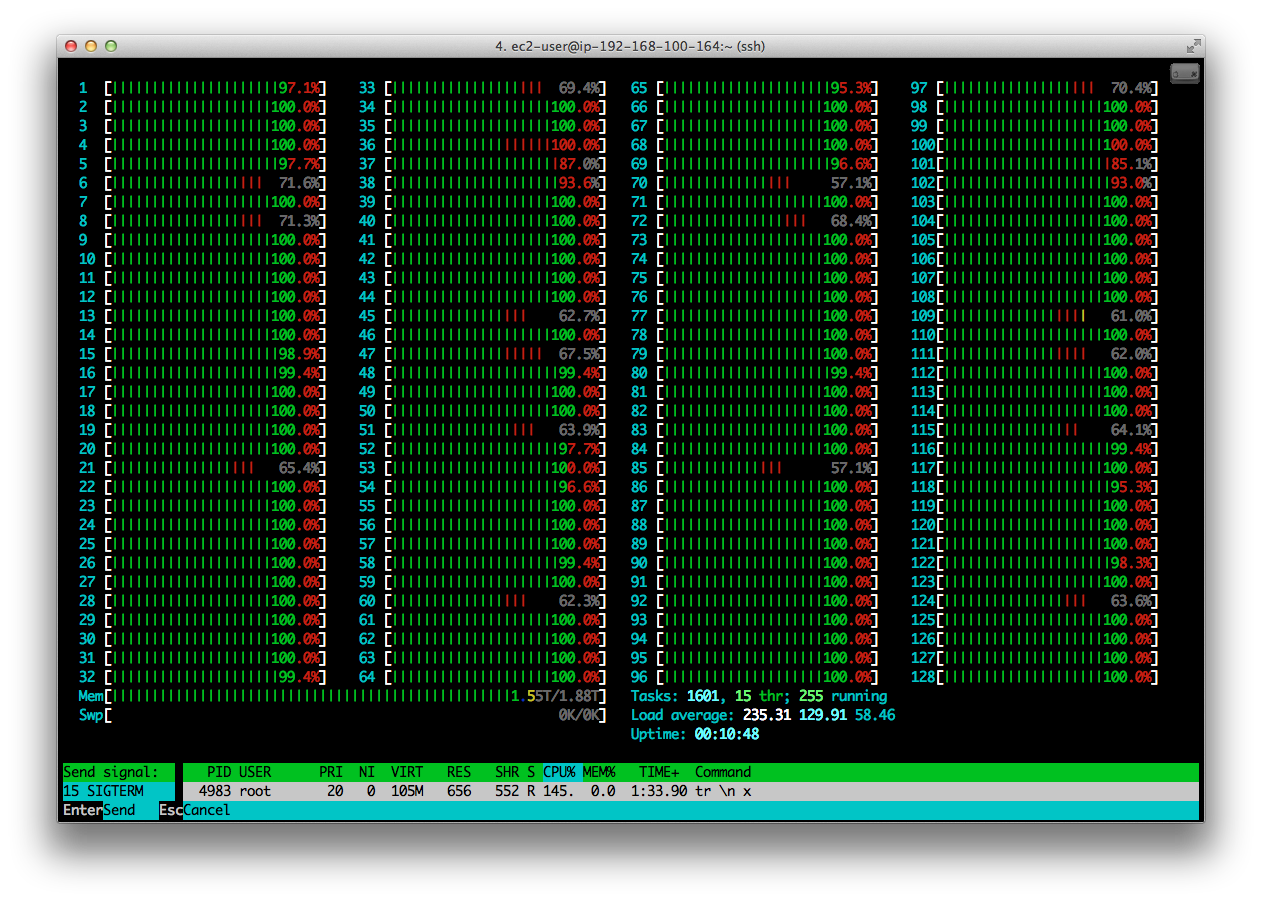

Memory Exhaustion

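Exhausting the memory was a little harder (to do quickly) but one of the more hard-core Unix guys came up with this old-school beauty. It combines the processor-hungry yes command with a character replacement that swaps every newline for an x, plus a grep search for a character that will never be found. Because the stream never contains a line ending, each grep has to keep buffering one ever-growing line in memory, and the sleep keeps its input open so that the memory is not released straight away.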

$ for i in `seq 1 40`; do cat <(yes | tr \\n x | head -c $((10240*1024*4096))) <(sleep 18000) | grep n & done
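As a rough check on the sizing in that command, the arithmetic can be spelled out in the shell itself. The function name plan_memory is hypothetical, and the totals are only the amount of data each head lets through; how much of it grep actually holds in memory at any moment is an assumption on our part.

```shell
# Hypothetical helper: show how much data a set of workers is allowed to
# push through the pipeline, given the per-worker 'head -c' limit in bytes.
plan_memory() {
    workers=$1
    bytes_per_worker=$2
    gib=$((1024 * 1024 * 1024))
    echo "per worker: $((bytes_per_worker / gib)) GiB, total: $((workers * bytes_per_worker / gib)) GiB"
}

# The values from the command above: 40 workers, 10240*1024*4096 bytes each.
plan_memory 40 $((10240 * 1024 * 4096))
# prints "per worker: 40 GiB, total: 1600 GiB"
```

So the 40 pipelines are sized to move roughly 1.6 of the instance's 1.9 Terabytes between them.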

And here it is in action too, noting the actual memory usage at the bottom left:

Note that the CPU usage, while almost at the limit, is not as clear-cut as before and all processors are being utilised equally (for the most part). Note also the Load Average of 235 (bottom, right of centre), which supports the theory that Unix systems can sustain load averages of twice the number of processors before encountering performance issues. Some folks believe the limit to be closer to one times the number of processors, but the results above suggest otherwise.
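To put a number on that ratio, the load average can be divided by the processor count. The sketch below uses a hypothetical function name, load_per_cpu, and takes both figures as arguments so it can be checked against the values from our run.

```shell
# Hypothetical helper: express a load average as a multiple of the CPU count.
load_per_cpu() {
    # $1 = load average, $2 = number of processors
    awk -v load="$1" -v cpus="$2" 'BEGIN { printf "%.2f\n", load / cpus }'
}

# The figures from the run above: a load average of 235 on 128 vCPUs.
load_per_cpu 235 128
# prints 1.84
```

On a live Linux system the same function could be fed from /proc/loadavg and nproc, for example load_per_cpu "$(cut -d ' ' -f 1 /proc/loadavg)" "$(nproc)".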

Amazon Web Services X1

The original announcement of the X1 instance type is available at: